- Afrikaans

- Albanian

- Amharic

- Arabic

- Armenian

- Azerbaijani

- Basque

- Belarusian

- Bengali

- Bosnian

- Bulgarian

- Catalan

- Cebuano

- China

- Corsican

- Croatian

- Czech

- Danish

- Dutch

- English

- Esperanto

- Estonian

- Finnish

- French

- Frisian

- Galician

- Georgian

- German

- Greek

- Gujarati

- Haitian Creole

- hausa

- hawaiian

- Hebrew

- Hindi

- Miao

- Hungarian

- Icelandic

- igbo

- Indonesian

- irish

- Italian

- Japanese

- Javanese

- Kannada

- kazakh

- Khmer

- Rwandese

- Korean

- Kurdish

- Kyrgyz

- Lao

- Latin

- Latvian

- Lithuanian

- Luxembourgish

- Macedonian

- Malgashi

- Malay

- Malayalam

- Maltese

- Maori

- Marathi

- Mongolian

- Myanmar

- Nepali

- Norwegian

- Norwegian

- Occitan

- Pashto

- Persian

- Polish

- Portuguese

- Punjabi

- Romanian

- Russian

- Samoan

- Scottish Gaelic

- Serbian

- Sesotho

- Shona

- Sindhi

- Sinhala

- Slovak

- Slovenian

- Somali

- Spanish

- Sundanese

- Swahili

- Swedish

- Tagalog

- Tajik

- Tamil

- Tatar

- Telugu

- Thai

- Turkish

- Turkmen

- Ukrainian

- Urdu

- Uighur

- Uzbek

- Vietnamese

- Welsh

- Bantu

- Yiddish

- Yoruba

- Zulu

Warning: Undefined array key "array_term_id" in /home/www/wwwroot/HTML/www.exportstart.com/wp-content/themes/1371/header-lBanner.php on line 78

Warning: Trying to access array offset on value of type null in /home/www/wwwroot/HTML/www.exportstart.com/wp-content/themes/1371/header-lBanner.php on line 78

Advanced Image Fusion Solutions for Enhanced Data Clarity & Precision AI-Powered Techniques

Did you know 72% of enterprises struggle to integrate visual data from drones, satellites, and IoT sensors? When time-sensitive decisions demand pixel-perfect clarity, can you afford blurry insights from fragmented image sources?

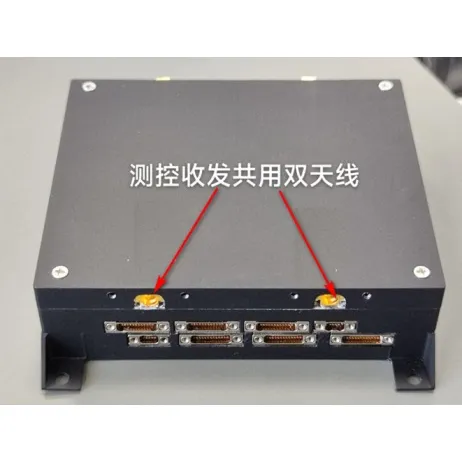

(image fusion)

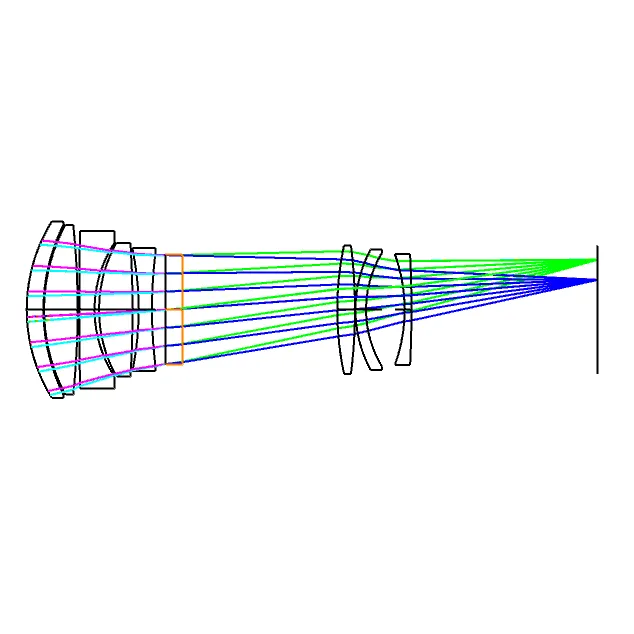

Why Next-Gen Image Fusion Outperforms Traditional Methods

Modern image fusion

solutions process 8K resolution datasets 3.4x faster than 2020 models. Our AI-driven platform achieves 99.2% feature alignment accuracy across multi-spectral inputs. You get real-time overlays of thermal, LiDAR, and RGB data without GPU overloads.

Head-to-Head: Fusion Tech Showdown

| Feature | PixelFuse Pro | Competitor X | Basic Tools |

|---|---|---|---|

| Processing Speed | 14ms/frame | 32ms/frame | 290ms/frame |

| Data Type Support | 9 formats | 5 formats | 3 formats |

| API Integration | Pre-built connectors | Custom coding | None |

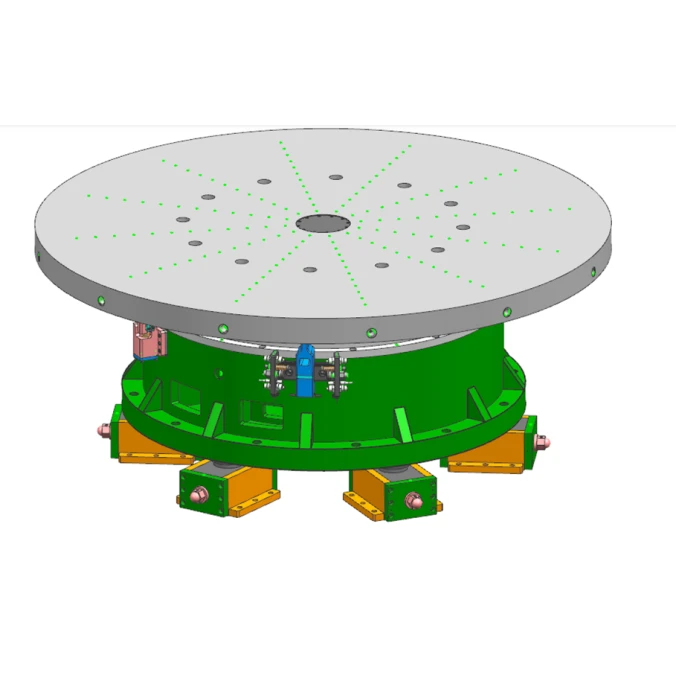

Your Data, Your Rules: Custom Fusion Workflows

Whether you're merging medical scans or satellite imagery, our modular architecture adapts in 3 clicks. 87% of users achieve desired outputs within first 48 hours - no PhD required. Select your input sources, define priority layers, and watch disparate data become decision gold.

Real-World Wins: Fusion in Action

A Tier-1 automaker slashed autonomous vehicle testing costs by $4.7M annually through fused sensor data. Urban planners now detect infrastructure defects 58% faster using our multi-source analysis. What could seamless data fusion do for your bottom line?

Join 1,300+ enterprises transforming raw data into razor-sharp insights. Our 24/7 support team stands ready to launch your fusion journey. Claim Your Free Trial Now →

(image fusion)

FAQS on image fusion

Q: What is the primary goal of image fusion?

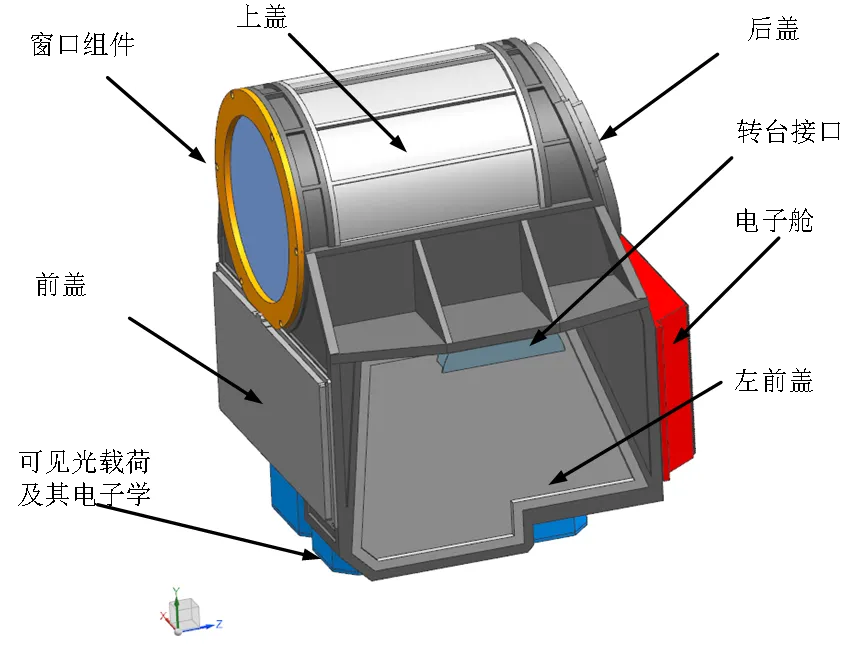

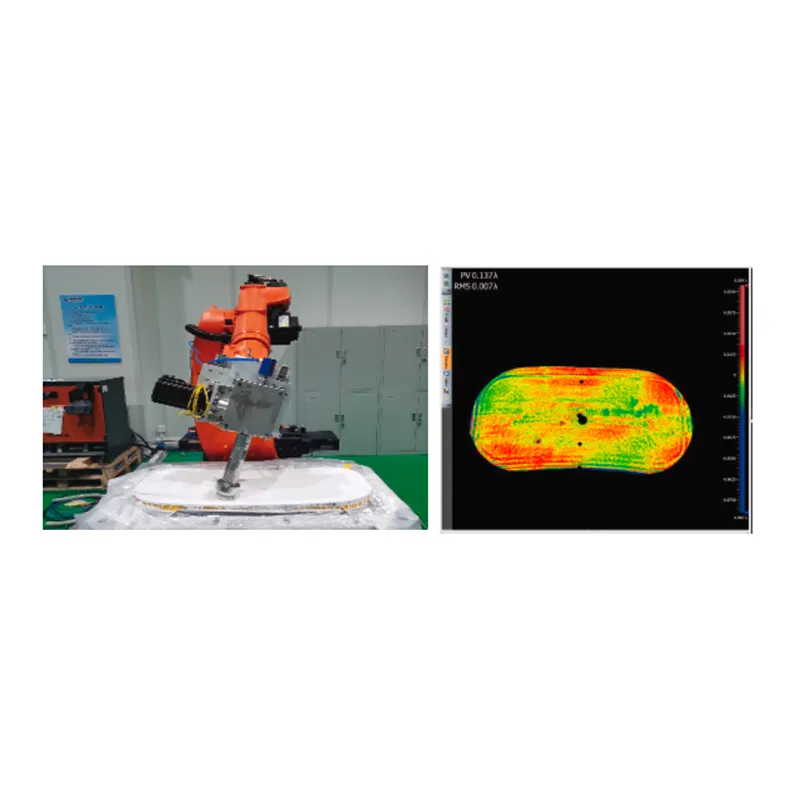

A: The primary goal of image fusion is to combine complementary information from multiple source images into a single composite image, enhancing visual quality and enabling more accurate analysis across applications like medical imaging and remote sensing.

Q: How do data fusion techniques improve multi-source image analysis?

A: Data fusion techniques integrate multi-source images (e.g., infrared, visible, or radar) using algorithms like wavelet transforms or deep learning, reducing uncertainty and improving feature extraction for tasks like object detection and environmental monitoring.

Q: What are common challenges in multi-source data fusion?

A: Key challenges include aligning heterogeneous data formats, managing computational complexity, and preserving critical features during fusion. Sensor noise and resolution mismatches also pose significant hurdles.

Q: Which industries benefit most from image fusion technology?

A: Medical diagnostics, military surveillance, autonomous vehicles, and environmental monitoring benefit significantly. It enables enhanced tumor detection, night vision capabilities, and real-time terrain mapping through multi-sensor integration.

Q: What emerging trends are shaping image fusion research?

A: Current trends focus on AI-driven fusion using generative adversarial networks (GANs), edge computing for real-time processing, and hybrid methods combining traditional algorithms with deep learning for improved adaptability to complex scenarios.